Apple’s Camera Magic “Deep Fusion”

What Google’s Pixel phones have taught us is that good photography depends not only on hardware but also on software magic. A.I., or artificial intelligence, is the new buzzword, and it has bitten every manufacturer, resulting in hardware and software integration in every new phone.

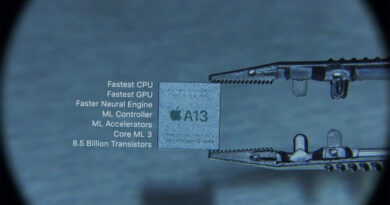

Apple joins the fray with Neural Engine hardware in its latest iPhone chip, the A13 Bionic. The software side of photography is handled by Apple’s new feature, called “Deep Fusion”.

Phil Schiller, Apple’s head of marketing, explained the feature with this single teaser picture.

The above image shows that, through machine learning, the output has a stunning amount of detail, great dynamic range, and very low noise.

Here is how Deep Fusion works:

It shoots a total of 9 images. Even before the shutter button is pressed, the phone captures 4 short images and 4 secondary images. When you press the shutter button, it takes 1 long-exposure photo. The Neural Engine then analyzes the combination of long and short images, picks the best among them, and goes through all 24 million pixels one by one to optimize for detail and low noise.
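To make the pixel-by-pixel idea concrete, here is a toy sketch in Python. It is not Apple’s algorithm, which has not been published and relies on a trained neural network. The function names (`local_contrast`, `fuse`), the `detail_weight` parameter, and the "sharpest pixel" rule are all assumptions made up for illustration. Frames are plain lists of grayscale rows.

```python
def local_contrast(frame, y, x):
    """Hypothetical detail score: how far a pixel sits from the mean of its 4 neighbours."""
    h, w = len(frame), len(frame[0])
    neighbours = [
        frame[ny][nx]
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
        if 0 <= ny < h and 0 <= nx < w
    ]
    if not neighbours:  # a 1x1 frame has no neighbours
        return 0.0
    return abs(frame[y][x] - sum(neighbours) / len(neighbours))


def fuse(short_frames, long_frame, detail_weight=0.5):
    """Toy fusion (assumed rule, not Apple's): for each pixel, take the short
    frame with the highest local contrast and blend it with the long exposure."""
    h, w = len(long_frame), len(long_frame[0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Pick the short frame that looks most detailed at this pixel.
            sharpest = max(short_frames, key=lambda f: local_contrast(f, y, x))
            # Blend its value with the long exposure at the same position.
            fused[y][x] = (
                detail_weight * sharpest[y][x]
                + (1 - detail_weight) * long_frame[y][x]
            )
    return fused


# Two tiny 2x2 "short" frames and one "long" frame, values invented for the demo.
short_frames = [
    [[10, 10], [10, 10]],
    [[10, 50], [10, 10]],
]
long_frame = [[20, 20], [20, 20]]
result = fuse(short_frames, long_frame)
```

A real pipeline would also have to align the frames and tell detail apart from noise, which this sketch ignores.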
