Get in Sync for Tear- and Lag-Free Gaming: G-Sync, FreeSync, V-Sync & HDMI VRR Explained

Every PC gamer worth their salt knows about the V-Sync option in the game settings menu, and every gamer with a high-spec rig knows the pain of screen tearing. So why do we get tearing at all, and what solutions have manufacturers proposed? Let’s find out in our latest episode of Insight (we really need a new name here), where we look into the issue and its solutions and explain the marketing and technical jargon along the way.

What is Screen Tearing?

It’s a visual artifact that occurs when information from two or more frames is displayed on the screen at the same time. If we look at the image below, we can see two frames being displayed on the monitor at once, one frame ahead of the other, with a tear where they meet. The image above the tear is frame 1 and the image below the tear is the next frame, i.e. frame 2.

Why does it occur?

Now that we know what tearing looks like, let’s see why it happens at all.

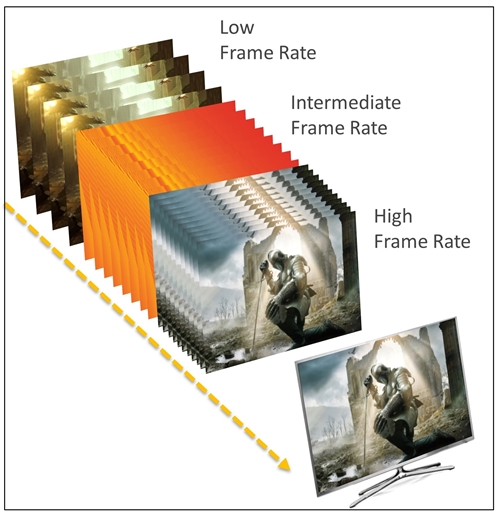

Looking at the example above, it is clear that the GPU is throwing out frames that are not in sync with the monitor, hence the tear. Monitors, like any display device, have their own refresh rate: one frame is displayed, followed by the next. So a static 60Hz display refreshes 60 times per second, all the time. The GPU or video output unit also generates images and sends them to the display, but at its own pace. Let’s say the GPU is throwing out 100 frames per second and the monitor is capable of only 60Hz. The monitor and GPU cannot stay in sync, and as a result parts of multiple frames thrown by the GPU get displayed on the monitor within a single refresh. This results in tearing.
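
To put rough numbers on this, here is a minimal Python sketch of the mismatch. It is a toy model, not how any real driver works: it assumes perfectly constant frame times, a GPU that swaps buffers the instant a frame is ready, and a scanout that takes the whole refresh interval. The function name `torn_refreshes` is made up for this example.

```python
from fractions import Fraction


def torn_refreshes(gpu_fps: int, refresh_hz: int, seconds: int = 1) -> int:
    """Count refresh cycles during which the GPU swaps frames mid-scanout.

    Toy model (assumption): the GPU finishes a frame every 1/gpu_fps seconds
    and swaps immediately, while the monitor spends the full 1/refresh_hz
    seconds drawing each refresh. A swap that lands strictly inside a
    refresh interval shows up as a tear.
    """
    frame_time = Fraction(1, gpu_fps)      # time between GPU buffer swaps
    refresh_time = Fraction(1, refresh_hz)  # time the monitor spends per refresh
    torn = 0
    for r in range(refresh_hz * seconds):
        start = r * refresh_time
        end = start + refresh_time
        # Index of the first swap that happens strictly after this refresh starts.
        k = start // frame_time + 1
        if k * frame_time < end:
            torn += 1
    return torn


print(torn_refreshes(100, 60))  # GPU faster than the monitor
print(torn_refreshes(60, 60))   # GPU exactly in step with the monitor
```

In this model, 100FPS on a 60Hz panel tears in every one of the 60 refreshes, while a GPU delivering exactly 60FPS in step with the monitor never tears.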

How to solve it?

From the above example, it looks like solving the problem is as simple as syncing frames between the monitor and the GPU. But there are multiple solutions, each with pros and cons of its own. Every manufacturer is stepping up with its own solution, including AMD’s FreeSync, Nvidia’s G-Sync, Apple’s ProMotion and Qualcomm’s Q-Sync. For this article, we will cover AMD, Nvidia, and the vendor-neutral options, i.e. V-Sync and HDMI VRR.

What is V-Sync?

Vertical synchronisation, or V-Sync, addresses the tearing issue by doing a simple thing: forcing the GPU to sync with the monitor’s refresh rate. For example, a GPU that wants to throw out 100FPS is forced to match the monitor’s 60Hz and is limited to 60FPS. This, however, causes other issues. The GPU remains underutilised: your shiny RTX 2080 Ti will be largely wasted if your monitor is limited to, say, 60Hz. It also does not solve the case where your poor GPU delivers fewer frames than the monitor refreshes, which may cause stutter. And it brings in input lag, where input from the keyboard and mouse is registered a bit late, which is quite sad for first-person shooter lovers who need that precision.
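
The cap and the stutter can both be seen in a small Python sketch. It assumes classic double-buffered V-Sync with constant render times, where a finished frame has to wait for the next refresh before it is shown. Triple buffering and real-world frame-time variation behave differently, and `vsync_fps` is a hypothetical helper, not a driver API.

```python
import math


def vsync_fps(gpu_fps: float, refresh_hz: float) -> float:
    """Effective frame rate under double-buffered V-Sync (simplified model).

    Assumption: every frame takes 1/gpu_fps seconds to render and can only be
    shown on a refresh boundary, so each frame occupies a whole number of
    refresh intervals.
    """
    # Number of refresh intervals each frame ends up occupying.
    intervals_per_frame = math.ceil(refresh_hz / gpu_fps)
    return refresh_hz / intervals_per_frame


for fps in (100, 60, 50, 25):
    print(f"GPU capable of {fps}FPS -> {vsync_fps(fps, 60):.0f}FPS on a 60Hz monitor")
```

Under these assumptions a GPU capable of 100FPS is held to 60FPS, while one that manages only 50FPS falls all the way to 30FPS, because every frame misses a refresh and waits for the next one. That sudden drop is the stutter described above.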

What is G-Sync?

Nvidia looked at the issue at hand and brought out its own technology to address it. It was called G-Sync, and it brought a revolution to the industry. Gamers who have used it swear that once you go G-Sync, you can’t go back.

G-Sync does the opposite of V-Sync. Unlike V-Sync, where the GPU is forced to sync with the monitor, here the monitor is forced to sync with the GPU. But how? These monitors have variable refresh rates, i.e. instead of a static refresh rate like 60Hz all the time, they support a range of refresh rates, like 30 to 144Hz. Thus if the GPU is able to output 100FPS, the monitor syncs its refresh rate to 100Hz. Similarly, if a more GPU-intensive segment of the game drops the output to 30FPS, the monitor adapts and goes into 30Hz mode.
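
The idea of the monitor following the GPU can be sketched in a few lines of Python. The 30 to 144Hz range is the example range from the paragraph above. The function names are invented for illustration, and simply clamping to the nearest limit is an assumption: what a real monitor does outside its range depends on the vendor and the settings.

```python
def in_vrr_range(gpu_fps: float, vrr_min: float = 30, vrr_max: float = 144) -> bool:
    """True if the GPU's frame rate falls inside the monitor's variable range."""
    return vrr_min <= gpu_fps <= vrr_max


def vrr_refresh_hz(gpu_fps: float, vrr_min: float = 30, vrr_max: float = 144) -> float:
    """Refresh rate a variable-refresh monitor would run at (simplified model).

    Inside the supported range the monitor simply follows the GPU. Outside it,
    this sketch clamps to the nearest limit (assumption; real behaviour varies).
    """
    return max(vrr_min, min(gpu_fps, vrr_max))


for fps in (100, 30, 200, 20):
    status = "in range" if in_vrr_range(fps) else "out of range"
    print(f"GPU at {fps}FPS -> monitor at {vrr_refresh_hz(fps)}Hz ({status})")
```

As long as the frame rate stays inside the range, the refresh rate and the frame rate are the same number, which is why there is nothing left to tear.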

Although Nvidia was a pioneer with this technology, it has its own issues. First, the vendor lock-in: you need both a G-Sync monitor and an Nvidia GPU to use this feature. AMD and Intel users were left in the dust. G-Sync is a proprietary standard and requires a special chip inside the monitor plus validation from Nvidia. This increases the cost of the monitor. Not to mention that you can’t switch to AMD in the future without losing the feature.

The technology was also limited to DisplayPort initially, but with the second generation it also supports HDMI.

What is FreeSync?

Not to be left behind, AMD entered the world of variable refresh rate by introducing FreeSync. As the name implies, this standard is free to implement. It works much like Nvidia’s G-Sync: FreeSync forces the monitor’s refresh rate to match the GPU’s output. The monitor has a range of refresh rates instead of a static refresh rate, and that is synced with the GPU. Unless your GPU throws out frames beyond the range of the monitor’s variable refresh rate, everything remains silky smooth. AMD’s implementation had certain advantages compared to Nvidia’s.

The standard was based on VESA’s Adaptive-Sync and was free to use. No royalty was charged to manufacturers, so FreeSync monitors are cheaper to produce. The total cost of ownership also came down, i.e. an AMD GPU + FreeSync monitor compared to an equivalent Nvidia GPU + G-Sync monitor. And since it is based on an open standard, every GPU vendor was free to implement it, which Nvidia did for a limited set of monitors, as announced at CES 2019.

However, this largely remained an AMD-only technology, resulting in another vendor lock-in issue. The quality of FreeSync monitors also ranged from low to high, making selection a tad more difficult.

In 2017 AMD announced FreeSync 2 with HDR and HDMI support. This helped in getting the technology onto consoles such as the Xbox One X.

HDMI 2.1 VRR Support

With the above solutions, it was clear that the standards remained vendor-specific and adoption remained largely tied to the GPU. Although AMD had the open standard, Nvidia had the upper hand in GPU market share, so FreeSync had limited penetration.

This is all set to change, as the HDMI Forum has announced the new HDMI 2.1 standard, which introduces support for variable refresh rate. It works in the same way as G-Sync and FreeSync: a monitor that supports multiple refresh rates adjusts to the GPU’s frame rate. So in essence, if a monitor is advertised as HDMI 2.1 ready, it should also be VRR ready, although VRR is an optional feature of the standard, so check the spec sheet. The monitor does not need an extra proprietary chip, and the standard is universal and open to everyone. Thus no extra cost is required to bake the feature in.

This works over a standard HDMI cable (one that supports HDMI 2.1, duh!) and hence removes the headache of supporting DisplayPort or any other port for that matter.

This opens up the goodness of VRR to every gamer in the world, be it PC or console gamers. We are sure that the next consoles, i.e. PS5 and Xbox Next, will have 8K support and VRR support baked in, and the TVs with HDMI 2.1 support by that time will offer VRR goodness for free.


